Business Intelligence

Big Data – The Genie is out of the Bottle!

Back in early 2011, other members of the Executive team at Ingres and I were taking a bet on the future of our company. We knew we needed to do something big and bold, so we decided to build what we thought the standard data platform would be in 5-7 years. A small minority of the team members did not believe this was possible and left, while the rest focused on making that happen. There were three strategic acquisitions to fill in the gaps on our Big Data platform. Today (as Actian), we have nearly achieved our goal. It was a leap of faith back then, but our vision turned out to be spot-on, and our gamble is paying off today.

My mailbox is filled daily with stories, seminars, white papers, etc., about Big Data. While it feels like this is becoming more mainstream, reading and hearing the various comments on the subject is interesting. They range from “It’s not real” and “It’s irrelevant” to “It can be transformational for your business” to “Without big data, there would be no <insert company name here>.”

What I continue to find amazing is hearing comments about big data being optional. It’s not – that genie has already been let out of the bottle. There are incredible opportunities for those companies that understand and embrace the potential. I like to tell people that big data can be their unfair advantage in business. Is that really the case? Let’s explore that assertion and find out.

We live in the age of the “Internet of Things.” Data about nearly everything is everywhere, and so are the tools to correlate that data to gain an understanding of so many things (activities, relationships, likes and dislikes, etc.). With smart devices that enable mobile computing, we have the extra dimension of location. And, with new technologies such as Graph Databases (often queried with languages such as SPARQL), graphic interfaces to analyze that data (such as Sigma), and identification technology such as Stylometry, it is getting easier to identify and correlate that data. Someday, this will feed into artificial intelligence, becoming a superpower for those who know how to leverage it effectively.

We are generating ever larger volumes of data about everything we do and everything going on around us, and tools are evolving to make sense of that data better and faster than ever. Those organizations that perform the best analysis, get the answers fastest, and act on that insight quickly are more likely to win than organizations that look at a smaller slice of the world or adopt a “wait and see” posture. So, that seems like a significant advantage in my book. But is it an unfair advantage?

First, let’s remember that big data is just another tool. Like most tools, it has the potential for misuse and abuse. Whether a particular application is viewed as “good” or “bad” is dependent on the goals and perspective of the entity using the tool (which may be the polar opposite view of the groups of people targeted by those people or organizations). So, I will not attempt to judge the various use cases but rather present a few use cases and let you decide.

Scenario 1 – Sales Organization: What if you could truly understand what a prospect company tells you it needs, and had a way to validate and refine that understanding? That’s half the battle in sales (budget, integration, and support / politics are other key hurdles). Imagine data that helped you understand not only the actions of that organization (customers and industries, sales and purchases, gains and losses, etc.) but also the stakeholders’ and decision-makers’ goals, interests, and biases. This could provide a holistic view of the environment and allow you to provide a highly targeted offering, with messaging tailored to each individual. That is possible, and I’ll explain soon.

Scenario 2 – Hiring Organization: Many questions cannot be asked by a hiring manager. While I’m not an attorney, I would bet that State and Federal laws have not kept pace with technology. And while those laws vary state by state, there are likely loopholes allowing public records to be used. Moreover, implied data that is not officially considered could color the judgment of a hiring manager or organization. For instance, if you wanted to “get a feeling” that a candidate might fit in with the team or the culture of the organization or have interests and views that are aligned with or contrary to your own, you could look for personal internet activity that would provide a more accurate picture of that person’s interests.

Scenario 3 – Teacher / Professor: There are already sites in use to search for plagiarism in written documents, but what if you had a way to make an accurate determination about whether an original work was created by your student? There are people who, for a price, will do the work and write a paper for a student. So, what if you could not only determine that the paper was not written by your student but also determine who the likely author was?

Do some of these things seem impossible or at least implausible? Personally, I don’t believe so. Let’s start with the typical data that our credit card companies, banks, search engines, and social network sites already have related to us. Add to that the identified information available for purchase from marketing companies and various government agencies. That alone can provide a pretty comprehensive view of us. But there is so much more that’s available.

Consider the potential of gathering information from intelligent devices accessible through the Internet, your alarm and video monitoring system, etc. These are intended to be private data sources, but one thing history has taught us is that anything accessible is subject to unauthorized access and use (just think about the numerous recent credit card hacking incidents).

Even de-identified data (medical / health / prescription / insurance claim data is one major example), which receives much less protection and can often be purchased, could be correlated with a reasonably high degree of confidence to gain an understanding of other “private” aspects of your life. The key is to look for connections (websites, IP addresses, locations, businesses, people), then for things that are logically related (such as illnesses / treatments / prescriptions), and then to accurately identify the individual behind them. Stylometry, for example, looks at things like sentence complexity, function words, co-location of words, misspellings and misuse of words, etc., and will likely someday take into consideration things like idea density. It is nearly impossible to remain anonymous in the Age of Big Data.
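To make the stylometry idea a little more concrete, here is a minimal sketch of two of the signals mentioned above: function-word usage and sentence length. It is only an illustration under simplifying assumptions. The ten-word `FUNCTION_WORDS` list, the `style_profile` and `cosine_similarity` helpers, and the scaling of sentence length are all hypothetical choices of mine, and real stylometric work uses far richer features and much more text.

```python
import math
import re
from collections import Counter

# Hypothetical, deliberately tiny function-word list (an assumption for this sketch).
FUNCTION_WORDS = ["the", "of", "and", "to", "a", "in", "that", "but", "is", "it"]


def style_profile(text):
    """Return relative function-word frequencies plus a scaled mean sentence length."""
    words = re.findall(r"[a-z']+", text.lower())
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    counts = Counter(words)
    total_words = max(len(words), 1)  # avoid dividing by zero on empty text
    profile = [counts[w] / total_words for w in FUNCTION_WORDS]
    # Mean words per sentence, divided by 100 so it is on a similar scale
    # to the frequencies above (an arbitrary choice for this sketch).
    profile.append(len(words) / max(len(sentences), 1) / 100.0)
    return profile


def cosine_similarity(a, b):
    """Cosine similarity of two equal-length profiles (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0
```

Comparing the profile of a submitted paper against profiles built from a student's earlier writing would give a rough similarity score. A score like this is a hint worth investigating, not proof of authorship.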

There has been a paradigm shift regarding the practical application of data analysis, and the companies that understand this and embrace it will likely perform better than those that don’t. There are new ethical considerations that arise from this technology, and likely new laws and regulations as well. But for now, the race is on!

Profitability through Operational Efficiency

In my last post, I discussed the importance of proper pricing for profitability and success. As most people know, you increase profitability by increasing revenue and/or decreasing costs. However, cost reduction does not necessarily mean slashing headcount, wages, benefits, or other factors that often negatively affect morale and cascade negatively on quality and customer satisfaction. There is often a better way.

The best businesses generally focus on repeatability and reliability, realizing that the more you do something, the better you should get at doing it. You develop a compelling selling story based on past successes, build a solid reference base, and identify the sweet spot from a pricing perspective. People keep buying what you are selling, and if your pricing is right, money is available at the end of the month to fund organic growth and operational efficiency efforts.

Finding ways to increase operational efficiency is the ideal way to reduce costs, but it takes time and effort. Sometimes this is realized through increases in experience and skill. Often, though, optimization occurs through standardization and automation: developing a system that works well, consistently applying it, measuring and analyzing the results, and then making changes to improve the process. An added benefit is that this approach increases quality, making your offering even more attractive.

Metrics should be collected at a “work package” level or lower (e.g., task level). A work package is a group of related tasks at the lowest level that produces a discrete deliverable. This project management concept works whether you are manufacturing something (although a Bill of Materials may be a better analogy in this segment), building something, or creating something. This allows you to accurately create and validate cost and time estimates. When analyzing work at this level of detail, it becomes easier to identify ways to simplify or automate the process.

When I had my company, we leveraged this approach to win more business with competitive fixed-price project bids that provided healthy profit margins for us while minimizing risk for our clients. Bigger profit margins allowed us to invest in our own growth and success by funding ongoing employee training and education, innovation efforts, and international expansion, as well as experimenting with new things (products, technology, methodology, etc.) that were fun and often taught us something valuable.

Those growth activities were only possible because we focused on doing everything as efficiently and effectively as possible, learning from everything we did – good and bad, and having a tangible way to measure and prove that we were constantly improving.

Think like a CEO, act like a COO, and measure like a CFO. Do this and make a real difference in your own business!

The Importance of Proper Pricing

Pricing is one of those things that can make or break a company. Doing it right takes an understanding of your business (cost structure and growth / profitability goals), the market, your competition, and more. Doing it wrong can mean the death of your business (fast or slow), the inability to attract and retain the best talent, and a situation where you no longer have the opportunity to reach your full potential.

These problems apply to companies of all sizes – although large organizations are often better positioned to absorb the impact of bad pricing decisions or sustain an unprofitable business unit. Understanding all possible outcomes is an important aspect of pricing related to risk and risk tolerance.

When I started my consulting company in 1999, we planned to win business by pricing our services 10%-15% lower than the competition. It was a bad plan that didn’t work. Unfortunately, this approach is something you see all too often in businesses today.

We only began to grow after increasing our prices (to about 10% more than the competition) and focusing on justifying that with our expertise and the value provided. We were (correctly) perceived as a premium alternative, and that positioning helped us grow.

Several years ago, I had a management consulting engagement with a small software company. The business owner told me they were “an overnight success 10 years in the making.” His concern was that they might not be able to capitalize on recent successes, so he was looking for an outside opinion.

I analyzed his business, product, customers, and competition. His largest competitor was the industry leader in this space, and products from both companies were evenly matched from a feature perspective. My client’s product even had a few key features for management and compliance in Healthcare and Union environments that his larger and more popular competitor lacked. So, why weren’t they growing faster?

I found that the competition was priced 400% higher for the base product. When I asked the owner, he told me their goal was to be priced 75%-80% less than the competition. He could not explain why he did this other than to state that he believed that his customers would be unwilling to pay any more than that. His lack of confidence in his product became evident to companies interested in his solution.

He often lost head-to-head competitions against that competitor, but almost never on features. Areas of concern were generally the size and profitability of his company and the risk each created for prospects considering his product.

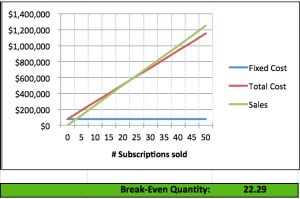

I shared the graph (above) with this person, explaining how proper pricing would increase their profitability and annual revenue and how both of those items would help provide customers and prospects with confidence. Moreover, this would allow the company to grow, eliminate single points of failure in key areas (Engineering and Customer Support), add features, and even spend money on marketing. Success breeds success!

In another example, I worked with the Product Manager of a large software company responsible for producing quarterly product package distributions. This work was outsourced, and each build cost approximately $50K. I asked, “What is the break-even point for each distribution?” That person replied, “There really isn’t a good way to tell.”

By the end of the day, I provided a Cost-Volume-Profit (CVP) analysis spreadsheet that showed the break-even point. Even more important, it showed the contribution margin and demonstrated there was very little operating leverage provided by these products (i.e., they weren’t very profitable even if you sold many of them).

My recommendations included increasing prices (which could negatively impact sales), investing in fewer releases per year, or finding a more cost-effective way of releasing those products. Without this analysis, their “business as usual” approach would have likely continued for several years.
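For readers who have not built a CVP analysis before, the core arithmetic is simple enough to sketch in a few lines. This is not the spreadsheet I delivered, just an illustration of the standard formulas. The $50K fixed cost per build comes from the example above, while the unit price, variable cost, and sales volume are hypothetical numbers picked only to make the math easy to follow.

```python
def cvp_summary(fixed_cost, unit_price, unit_variable_cost, units_sold):
    """Basic Cost-Volume-Profit figures for a single product."""
    # Contribution margin: what each unit sold contributes toward fixed costs.
    contribution_margin = unit_price - unit_variable_cost
    if contribution_margin <= 0:
        raise ValueError("unit price must exceed unit variable cost to ever break even")
    return {
        "contribution_margin": contribution_margin,
        "contribution_margin_ratio": contribution_margin / unit_price,
        "break_even_units": fixed_cost / contribution_margin,
        "operating_income": contribution_margin * units_sold - fixed_cost,
    }


# Fixed cost of one build is from the post; every other input is hypothetical.
summary = cvp_summary(
    fixed_cost=50_000,      # approximate cost of one outsourced build
    unit_price=100,         # hypothetical price per unit
    unit_variable_cost=80,  # hypothetical variable cost per unit
    units_sold=4_000,       # hypothetical sales volume
)
```

With these made-up inputs, each unit contributes only $20, so 2,500 units must be sold before a release pays for itself. A thin contribution margin like that is exactly why high volume alone does not make a product very profitable.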

Companies are in business to make money – pure and simple. Everything you do as a business owner or leader needs to be focused on growth. Growth is the result of a combination of factors, such as the uniqueness of the product or services provided, quality, reputation, efficiency, and repeatability. Many of these are the same factors that also drive profitability. Proper pricing can help predictably drive profitability, and having excess profits to invest can significantly impact growth.

Some customers and prospects will do everything possible to whittle your profit margins down to nothing. They are focused on their own short-term gain and not on the long-term risk created for their suppliers. Those same “frugal” companies expect to profit from their own business, so it is unreasonable to expect anything less from their suppliers.

My feeling is that “Not all business is good business,” so it is better to walk away from bad business in order to focus on the business that helps your company grow and be successful.

One of the best books on pricing I’ve ever found is “The Strategy and Tactics of Pricing: A Guide to Profitable Decision Making” by Thomas T. Nagle and Reed K. Holden. I recommend this extremely comprehensive and practical book to anyone responsible for pricing or with P&L responsibility within an organization. It addresses the many complexities of pricing and is truly an invaluable reference.

In a future post, I will write about the metrics I use to understand efficiency and profitability. Metrics can be your best friend when optimizing pricing and maximizing profitability. This can help you create a systematic approach to business that increases efficiency, consistency, and quality.

At my company, we developed a system where we knew how long common tasks would take to complete and had efficiency factors for each consultant. This allowed us to create estimates based on the type of work and the people most likely to work on the task and fix-bid the work. Our bids were competitive, and even when we were the highest-priced bid we often won because we would be the only (or one of the few) companies to guarantee prices and results. Our level of effort estimates were accurate to within +/- 4%, and that helped us maintain a 40%+ minimum gross margin for every project. This analytical approach helped our business double in revenue without doubling in size.

There are many causes of poor pricing, including a lack of understanding of cost structure; a lack of understanding of the value provided by a product or service; a lack of understanding of the level of effort to create, maintain, deliver, and improve a product or service; and a lack of concern for profitability (e.g., salespeople who are paid on the size of the deal, and not on margins or profitability).
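The estimating approach described above can be sketched roughly as follows. This is not our actual system. The task names, baseline hours, consultant names, efficiency factors, and hourly cost are all hypothetical, and I am assuming an efficiency factor is a simple multiplier on the baseline hours (below 1.0 means faster than baseline).

```python
# Hypothetical baseline hours for common, repeatable tasks.
BASELINE_HOURS = {"data model review": 16.0, "report build": 24.0}

# Hypothetical efficiency factors per consultant (multiplier on baseline hours).
EFFICIENCY = {"alice": 0.9, "bob": 1.2}


def fixed_bid(assignments, hourly_cost, target_margin=0.40):
    """Estimate hours, cost, and a fixed price that yields the target gross margin.

    assignments is a list of (task, consultant) pairs.
    """
    if not 0 <= target_margin < 1:
        raise ValueError("target_margin must be in the range [0, 1)")
    hours = sum(BASELINE_HOURS[task] * EFFICIENCY[person] for task, person in assignments)
    cost = hours * hourly_cost
    # Gross margin = (price - cost) / price, so price = cost / (1 - margin).
    price = cost / (1.0 - target_margin)
    return hours, cost, price


hours, cost, price = fixed_bid(
    [("data model review", "alice"), ("report build", "bob")],
    hourly_cost=100.0,  # hypothetical fully loaded cost per hour
)
```

With these sample numbers the estimate comes to about 43.2 hours and $4,320 of cost, which implies a fixed price of about $7,200 to hold a 40% gross margin. Comparing estimated hours to actual hours after each project is what lets you tune the baselines and factors over time.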

But, with a little understanding and effort, you can make small adjustments to your pricing approach and models that can have a huge impact on your business’s bottom line.

To Measure is to Know

William Thomson, better known as Lord Kelvin, was a pretty smart guy who lived in the 1800s. He didn’t get everything right (e.g., he supposedly stated, “X-rays will prove to be a hoax.”), but his success ratio was far better than most, so he possessed useful insight. I’m a fan of his quote, “If you can not measure it, you can not improve it.”

Business Intelligence (BI) systems can be very powerful, but only when embraced as a catalyst for change. What you often find in practice is that the systems are not actively used, do not track the “right” metrics (i.e., those that highlight something important, ideally a leading indicator, that you can adjust in order to change the results), or provide the right information, only too late to make a difference.

The goal of any business is to develop a profitable business model and execute extremely well. So, you need to have something people want, deliver high-quality goods and/or services, and finally make sure you can do that profitably (it’s amazing how many businesses fail to understand this last part). Developing a systematic approach that allows for repeatable success is extremely important. Pricing at a competitive level with a healthy profit margin provides the means for sustainable growth.

Every business is systemic in nature. Outputs from one area (such as a steady flow of qualified leads from Marketing) become inputs to another (Sales). Closed deals feed project teams, development teams, support teams, etc. Great jobs by those teams will generate referrals, expansion, and other growth – and the cycle continues. This is an important concept because problems or deficiencies in one area can negatively affect others.

Next, the understanding of cause and effect is important. For example, if your website is not getting traffic, is it because of poor search engine optimization or bad messaging and/or presentation? If people visit your website but don’t stay long, do you know what they are doing? Some formatting is better for printing than reading on a screen (such as multi-column pages), so people tend to print and go. And external links that do not open in a new window can hurt the “stickiness” of a website. Cause and effect are not always as simple as they seem, but having data on as many areas as possible will help you identify which ones are important.

When I had my company, we gathered metrics on everything. We even had “efficiency factors” for every Consultant. That helped with estimating, pricing, and scheduling. We would break work down into repeatable components for estimating purposes. Over time we found that our estimates ranged between 4% under and 5% over the actual time required for nearly every work package within a project. This allowed us to profitably fix bid projects, which in turn created confidence for new customers. Our pricing was lean (we usually came in about the middle of the pack from a price perspective, but a critical difference was that we could guarantee delivery at that price). More importantly, it allowed us to maintain a healthy profit margin to hire the best people, treat them well, invest in our business, and create sustainable profitability.

There are many standard metrics for all aspects of a business. Getting started can be as simple as creating sample data based on estimates, “working the model” with that data, and seeing if this provides additional insight into business processes. Then ask, “When and where could I have made a change to positively impact the results?” Keep working until you have something that seems to work, then gather real data and validate (or fix) the model. You don’t need fancy dashboards (yet). When getting started, it is best to focus on the data, not the flash.

Within a few days, it is often possible to identify and validate the Key Performance Indicators (KPIs) that are most relevant to your business. Then, start consistently gathering data, systematically analyzing it, and presenting it in a timely manner, in a way that is easy to understand and drill into. To measure the right things really is to know.

Spurious Correlations – What they are and Why they Matter

In an earlier post, I mentioned that one of the big benefits of geospatial technology is its ability to show connections between complex and often disparate data sets. As you work with Big Data, you tend to see the value of these multi-layered and often multi-dimensional perspectives of a trend or event. While that can lead to incredible results, it can also lead to spurious data correlations.

First, let me state that I am not a Data Scientist or Statistician, and there are definitely people far more expert on this topic than I am. But, if you are like the majority of companies out there experimenting with geospatial and big data, it is likely that your company doesn’t have these experts on staff. So, a little awareness, understanding, and caution can go a long way in this scenario.

Before we dig into that more, let’s think about what your goal is:

- Do you want to be able to identify and understand a particular trend – reinforcing actions and/or behavior? –OR–

- Do you want to understand what triggers a specific event – initiating a specific behavior?

Both are important, but they are different. My focus has been identifying trends so that you can leverage or exploit them for commercial gain. While that may sound a bit ominous, it is really what business is all about.

A popular saying goes, “Correlation does not imply causation.” A common example is that you may see many fire trucks for a large fire. There is a correlation, but it does not imply that fire trucks cause fires. Now, extending this analogy, let’s assume that the probability of a fire starting in a multi-tenant building in a major city is relatively high. Since it is a big city, it is likely that most of those apartments or condos have WiFi hotspots. A spurious correlation would be to imply that WiFi hotspots cause fires.

As you can see, there is definitely the potential to misunderstand the results of correlated data. A more logical analysis would lead you to see the relationships between the type of building (multi-tenant residential housing) and technology (WiFi) or income (middle-class or higher). Taking the next step to understand the findings, rather than accepting them at face value, is very important.
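Here is a small simulation of the WiFi and fire example, written as a sketch rather than a rigorous statistical treatment. All of the numbers are invented. I am assuming that building size (number of units) drives both the hotspot count and the fire count, and that the two have no direct link. The `simulate`, `pearson`, and `partial_corr` names are my own helpers, with `partial_corr` using the standard first-order partial correlation formula to control for building size.

```python
import math
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def partial_corr(xs, ys, zs):
    """Correlation of xs and ys after controlling for zs."""
    r_xy, r_xz, r_yz = pearson(xs, ys), pearson(xs, zs), pearson(ys, zs)
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))


def simulate(n=2000, seed=0):
    """Invented data: units per building drives both WiFi hotspots and fires."""
    rng = random.Random(seed)
    units = [rng.uniform(10, 200) for _ in range(n)]
    wifi = [0.8 * u + rng.gauss(0, 5) for u in units]       # hotspots follow unit count
    fires = [0.01 * u + rng.gauss(0, 0.5) for u in units]   # fires follow unit count
    return units, wifi, fires


units, wifi, fires = simulate()
raw = pearson(wifi, fires)                    # looks like a strong relationship
controlled = partial_corr(wifi, fires, units) # close to zero once size is held constant
```

In this toy data set the raw correlation between hotspots and fires is strong, yet it all but disappears once building size is taken into account. That is the kind of "next step" check that separates a useful trend from a spurious one.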

Once you have what looks to be an interesting correlation, there are many fun and interesting things you can do to validate, refine, or refute your hypothesis. It is likely that even without high-caliber data experts and specialists, you will be able to identify correlations and trends that can provide you and your company with a competitive advantage. Don’t let the potential complexity become an excuse for not getting started. As you can see, gaining insight and creating value with a little effort and simple analysis is possible.
