Selling

Shouldn’t Sales Forecasting be Easy? What about Accuracy?

I’m sure everyone has read articles that state some “facts” about managing your “sales pipeline” or “sales funnel.” Things like needing 10x-30x of your goal at the start of the process, down to needing 2x-3x coverage at the start of a quarter to improve your odds of achieving your goal. If only it were that easy…
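Rules of thumb like 2x-3x coverage are easier to reason about with the arithmetic behind them. Below is a minimal sketch. It treats coverage as open pipeline divided by quota. It also shows that if a fixed share of pipeline value closes, the coverage you need is simply the inverse of that win rate. The quota, pipeline, and win-rate figures are hypothetical. The math also assumes that every open deal could close within the period.

```python
# Minimal sketch: pipeline coverage vs. the coverage a historical win rate implies.
# All figures are hypothetical, for illustration only.

def coverage_ratio(pipeline_value: float, quota: float) -> float:
    """Open pipeline divided by quota for the period (3.0 means 3x)."""
    if quota <= 0:
        raise ValueError("quota must be positive")
    return pipeline_value / quota


def required_coverage(win_rate: float) -> float:
    """Coverage needed to expect to reach quota if win_rate (by value) holds."""
    if not 0 < win_rate <= 1:
        raise ValueError("win_rate must be in (0, 1]")
    return 1 / win_rate


quota = 1_000_000      # hypothetical quarterly quota
pipeline = 2_500_000   # hypothetical open pipeline at the start of the quarter
win_rate = 0.30        # hypothetical historical win rate, by value

have = coverage_ratio(pipeline, quota)
need = required_coverage(win_rate)
print(f"coverage: {have:.1f}x, needed at a {win_rate:.0%} win rate: {need:.2f}x")
print(f"expected bookings: {pipeline * win_rate:,.0f}")
```

In this example, 2.5x coverage looks healthy, but at a 30% win rate roughly 3.3x would be needed. This is why the "right" ratio depends on your own historical conversion rates rather than on a generic rule.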

First, what are you measuring? Anyone with a sales quota should be able to answer this question succinctly. For example, are you measuring:

- Bookings – Finalized Sales Orders

  - What happens when Sales Operations, Finance, or Legal push back on a deal? You have a PO, but has the deal really been closed?

- Billings – Invoicing Completed

  - This includes dependencies that can introduce delays that may be unexpected and/or outside of your control.

- Revenue – An in-depth understanding of Revenue Recognition rules is key.

  - How much revenue is recognized, and when it is recognized, varies based on a variety of factors, such as:

    - Is revenue Accrued or Deferred? This is especially important for multi-year prepaid deals.

    - Is revenue recognized all at once, such as for the sale of Perpetual Software Licenses? (Even this is not always black and white.)

    - Is revenue recognized over time, such as with annual subscriptions that are ratable on a monthly basis?

    - Is revenue based on work completed / percentage of completion? This is more common with Services and Construction. Combining contracts, such as selling custom consulting services with a new product license, can complicate this.

    - Are there clauses in a non-standard agreement that will negatively affect revenue recognition? This is where your Legal team becomes an invaluable contributor to your success.

- Cash Flow – Is this really Sales forecasting?

  - The answer is ‘no’ in terms of Accounting rules and guidance.

  - But if you have a start-up or small business, this can be key to “keeping the lights on,” in which case the types of deals and their structure will be biased towards improving cash flow and/or meeting cash flow goals.


My advice is to work closely with your CFO, Finance Team, Sales Operations Team, and Legal team to understand the goals and guidelines and then take that one step further to create policies that are approved by those stakeholders and are then shared with the Sales team to avoid any ambiguity around process and expectations.
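Here is a minimal sketch of the "recognized over time" case from the list above. It builds a straight-line (ratable) schedule for a prepaid subscription and tracks how much revenue is recognized each month and how much remains deferred. The contract value and the `ratable_schedule` helper are hypothetical. Actual recognition depends on contract terms and the applicable accounting standard, so treat this as an illustration to discuss with Finance, not as accounting guidance.

```python
# Simplified straight-line (ratable) schedule for a prepaid subscription.
# Hypothetical figures; real revenue recognition depends on contract terms
# and the applicable accounting standard.
from typing import List, Tuple


def ratable_schedule(total_cents: int, months: int) -> List[Tuple[int, int, int]]:
    """Return (month, recognized_cents, deferred_balance_cents) for each month.

    Amounts are integer cents. Any rounding remainder is recognized in the
    final month so the schedule sums exactly to the contract value.
    """
    if months <= 0:
        raise ValueError("months must be positive")
    base, remainder = divmod(total_cents, months)
    deferred = total_cents
    schedule = []
    for month in range(1, months + 1):
        recognized = base + (remainder if month == months else 0)
        deferred -= recognized
        schedule.append((month, recognized, deferred))
    return schedule


# A hypothetical 12-month, $100,000 subscription billed up front.
for month, rec, bal in ratable_schedule(100_000_00, 12):
    print(f"month {month:2d}: recognized ${rec / 100:,.2f}, deferred ${bal / 100:,.2f}")
```

The full $100,000 may count as a booking and a billing in month 1, but under this schedule only about $8,333 is revenue in that month. This is one reason to be precise about which of these you are measuring.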

So, now the hard part is over, right?

It could be that easy if you only have one well-established product, a stable install base, and no real competitive threats, where the rate of growth or decline is on a steady and predictable path and where pricing and average deal sizes are consistent. I have not seen a business like that yet, but I would have to believe that at least a few exist.


Next, what are you building into your model to maximize accuracy? Every product or service offered may be driven by independent factors, so a flat model that evenly distributes sales over time (monthly or quarterly) is just begging to be inaccurate. For example:

- One product line that sells perpetual licenses may depend on release cycles every 18-36 months.

- A second product line may be driven mainly by renewals and expansion on fairly stable timelines and billings.

- A third product line may be new with no track record and in a competitive space – meaning that even the best projections will be speculative.

- Finally, services could be associated with each product line and driven by more dependent and independent factors (new implementations, upgrades, implementing new features, platform changes and modernization, routine engagements, training, etc.)
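A simple way to avoid a flat model is to forecast each product line with its own timing, then sum the lines. The sketch below compares an even quarterly split with a per-line build. The product lines, annual targets, and quarterly weights are hypothetical stand-ins for the kinds of lines described above. In practice, the weights would come from each line's history and known events such as release dates.

```python
# Sketch: a quarterly forecast built per product line instead of a flat split.
# Product lines, annual targets, and quarterly weights are hypothetical.
from typing import Dict, List

ANNUAL_TARGETS: Dict[str, float] = {
    "perpetual_licenses": 4_000_000,   # tied to an 18-36 month release cycle
    "renewals_expansion": 3_000_000,   # fairly stable timing
    "new_product": 1_000_000,          # speculative, assumed to be back-loaded
}

# Share of each line's annual target expected in Q1..Q4 (each row sums to 1).
QUARTER_WEIGHTS: Dict[str, List[float]] = {
    "perpetual_licenses": [0.10, 0.15, 0.45, 0.30],  # e.g., a release lands in Q3
    "renewals_expansion": [0.25, 0.25, 0.25, 0.25],
    "new_product": [0.05, 0.15, 0.30, 0.50],
}


def flat_forecast(targets: Dict[str, float]) -> List[float]:
    """Spread the combined annual target evenly across four quarters."""
    total = sum(targets.values())
    return [total / 4] * 4


def per_line_forecast(targets: Dict[str, float],
                      weights: Dict[str, List[float]]) -> List[float]:
    """Sum each line's annual target times its own quarterly weights."""
    quarters = [0.0] * 4
    for line, annual in targets.items():
        w = weights[line]
        if abs(sum(w) - 1.0) > 1e-9:
            raise ValueError(f"weights for {line} must sum to 1")
        for q in range(4):
            quarters[q] += annual * w[q]
    return quarters


flat = flat_forecast(ANNUAL_TARGETS)
modeled = per_line_forecast(ANNUAL_TARGETS, QUARTER_WEIGHTS)
for q, (f, m) in enumerate(zip(flat, modeled), start=1):
    print(f"Q{q}: flat {f:,.0f} vs per-line {m:,.0f}")
```

Both models produce the same annual total. However, they tell very different stories about which quarters are at risk, and that difference matters when you measure progress during the year.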

Historical trends are one important factor to consider, especially because they tend to reflect the things you have the greatest control over. This starts with high-level sales conversion rates and goes down to average sales cycle, seasonal trends, organic growth rates, churn rates, and more. Having accurate data collected over enough time to support reliable correlations is extremely helpful. But factors such as Product SKU changes, licensing model changes, new product bundles, etc., increase the complexity of that effort and can decrease the accuracy of your results.

Correlating those trends to external factors, such as overall growth of the market, relative growth of competitors, economic indicators, corporate indicators (profits, earnings per share, distributions, various ratios, ratings, etc.), commodity and futures prices (especially if your install base tends to skew towards something like the Petroleum Industry), specific events, and so forth can be a great sanity check.

The best case is that those correlations increase your forecasting accuracy for the entire year. In all likelihood, they provide valuable inputs that allow you to dynamically adjust sales plans as needed to ensure overall success. But, making those changes should not be done in a vacuum, and communicating the potential need for changes like that should be done at the earliest point where you have a fair degree of confidence that change is needed.

There will always be unexpected events that negatively impact your plans. Changes to staffing or the competitive landscape, reputational changes, economic changes, etc., can all occur quickly and with “little notice.” That is especially true if you are not actively looking for those subtle indicators (leading and trailing) and nuances that place a spotlight on potential problems and give you time to do as much as possible to proactively address them. Be prepared and have a contingency plan!

Forecasting accuracy drives confidence, which leads to the ability to do things like getting funding for new campaigns or initiatives. Surprises, even positive ones, are generally disliked simply because the results differ from expectations, which begins to fuel other doubts and concerns.

Confidence comes from understanding and good planning. That means helping everyone with a quota, and the teams supporting them, do what is needed when it is needed to optimize the process. It also means having an effective way to determine whether deals really are on track, so that you can provide guidance and assistance before it is too late.

It may not be easy, but it is the thing that helps drive companies to the next level on a sustainable growth trajectory. In the end, that matters the most to the stakeholders of any business.

As an aside, myriad rules, regulations, and guidance statements are provided by various sources that apply to each business scenario. I am neither an Accountant nor an Attorney, so consult with the appropriate people within your organization or industry as part of your routine due diligence.

Good Article on Why AI Projects Fail

Today I ran across a very good article that focuses on lessons learned, which can help everyone interested in these topics. It covers a good mix of problems at a non-technical level.

Below is the link to the article, followed by my commentary on the top three items from my perspective.

https://www.cio.com/article/3429177/6-reasons-why-ai-projects-fail.html

Item #1:

The article discusses how the “problem” being evaluated was misstated using technical terms. At least some of these efforts were conducted “in a vacuum.” Given the cost and strategic importance of getting these early-adopter AI projects right, that was a surprise.

In Sales and Marketing, you start with the question, “What problem are we trying to solve?” and evolve it to, “How would customers or prospects describe this problem in their own words?” Without that understanding, you can neither vet the solution initially nor quickly qualify the need for your solution when speaking with those customers or prospects. That leaves room for error when transitioning from strategy to execution.

Increased collaboration with the business would likely have helped. This was touched on at the end of the article under “Cultural challenges,” but its importance seemed to be downplayed. Lessons learned are valuable, especially when you are able to learn from the mistakes of others. This should have been called out early as a major lesson learned.

Item #2:

This second area had to do with the perspective of the data, whether that was the angle of the subject in photographs (overhead from a drone vs horizontal from the shoreline) or the type of customer data evaluated (such as from a single source) used to train the ML algorithm.

That was interesting because assumptions may have played a part in overlooking other aspects of the problem, or the teams may have been overly confident about obtaining the correct results using the data available. In the examples cited, those teams figured out those problems and took corrective action. A follow-up article describing the process used to determine the root cause in each case would be very interesting.

As an aside, from my perspective, this is why Explainable AI is so important. Sometimes, you just don’t know what you don’t know (the unknown unknowns). Understanding why and on what the AI is basing its decisions should help with providing better quality curated data up-front, as well as identifying potential drifts in the wrong direction while it is still early enough to make corrections without impacting deadlines or deliverables.
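One simple, model-agnostic way to see "on what" a model is basing its decisions is permutation importance. You shuffle one input at a time and measure how much accuracy drops. The sketch below is a toy illustration: the "model" and the data are hypothetical, and the same rule that the model uses generates the labels. Real projects would evaluate on held-out data and would typically use an existing implementation, such as scikit-learn's `sklearn.inspection.permutation_importance`.

```python
# Sketch of permutation importance on a toy model and toy data (hypothetical).
import random
from typing import Dict, List


def model(row: Dict[str, float]) -> int:
    # Toy classifier: only looks at "signal"; "background" is ignored.
    return 1 if row["signal"] > 0.5 else 0


def accuracy(rows: List[Dict[str, float]], labels: List[int]) -> float:
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)


def permutation_importance(rows: List[Dict[str, float]], labels: List[int],
                           feature: str, seed: int = 0) -> float:
    """Drop in accuracy after shuffling one feature's values across rows."""
    rng = random.Random(seed)
    values = [r[feature] for r in rows]
    rng.shuffle(values)
    permuted = [{**r, feature: v} for r, v in zip(rows, values)]
    return accuracy(rows, labels) - accuracy(permuted, labels)


rng = random.Random(42)
rows = [{"signal": rng.random(), "background": rng.random()} for _ in range(500)]
labels = [model(r) for r in rows]  # labels produced by the same rule, for clarity

for feature in ("signal", "background"):
    print(feature, round(permutation_importance(rows, labels, feature), 3))
```

A feature that the model leans on heavily shows a large drop, and a feature that it ignores shows none. If an unexpected input, such as the camera angle in the drone example, shows a large drop, that is an early warning sign.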

Item #3:

This didn’t surprise me, but it should be a cause for concern as advances are made at faster rates and potentially less validation is performed as organizations race to be first to market with some AI-based competitive advantage. The last paragraph under ‘Training data bias’ stated that, based on a PwC survey, “only 25 percent of respondents said they would prioritize the ethical implications of an AI solution before implementing it.”

Bonus Item:

The discussion about the value of unstructured data was very interesting, especially when you consider:

- The potential for NLU (natural language understanding) products in conjunction with ML and AI.

- This is a great NLU-pipeline diagram from North Side Inc. in Canada, one of the pioneers in this space.

- The importance of semantic data analysis relative to any ML effort.

- The incredible value that products like MarkLogic’s database or Franz’s AllegroGraph provide over standard Analytics Database products.

- I personally believe that the biggest exception to this assertion will be from GPU databases (like OmniSci) that easily handle streaming data, can accomplish extreme computational feats well beyond those of traditional CPU-based products, and have geospatial capabilities that provide an additional dimension of insight to the problem being solved.

Update: This is a link to a related article that discusses trends in areas of implementation, important considerations, and the potential ROI of AI projects: https://www.fastcompany.com/90387050/reduce-the-hype-and-find-a-plan-how-to-adopt-an-ai-strategy

This is definitely an exciting space that will experience significant growth over the next 3-5 years. The more information, experiences, and lessons learned are shared, the better it will be for everyone.

Creating Customers for Life (4 minute read)

The goal for any business, regardless of the products you sell or the services you provide, should be maintaining a satisfied customer base that is loyal to your business. The idea is to create a mutually beneficial relationship that motivates people to want to continue working with you despite the availability of competitive products or motivations (e.g., those pushing for a “Corporate Standard” involving another product.)

The best part is that this concept applies to all companies and all Product Life Cycle stages. It works whether your company is on a rapid growth trajectory towards ‘Unicorn status’ or your offerings are mature and viewed as ‘less exciting.’ The approach will also help if your products are in decline and you are seeking the ‘longest tail’ possible. At each phase, there are credible threats from competitors that seek to grow through the erosion of your business.

Several years ago, I was responsible for two product lines in two major geographic regions (Americas and APAC/Japan). Our attrition rate (“churn”) was traditionally slightly below the industry average. We began seeing an increase in churn and a corresponding decrease in organic growth. Both were indicators that something needed to change.

After discussing tactical approaches to address this, our small leadership team agreed that this was a strategic issue we needed to address. The result was an understanding that we needed to create ‘Customers for Life.’ Everyone agreed with the concept, but due to various differences (culture, who our customer was – end customer vs. channel partner, buying patterns, etc.), we agreed to try what was best for each of our regional businesses and share the results and lessons learned.

My approach focused on developing strong relationships that fostered collaboration and ultimately led to growth and success for both parties. The basic premise was simple:

- People tend to buy from people they like, respect, and trust. Become one of those people for your customers.

- Helping companies achieve better business outcomes leads to greater success for our customers and us.

How did we do it? My team used a systematic process that included the following:

- Develop simple profiles for each customer (e.g., products used, date of first purchase, size of footprint, usage and payment trends, industry).

  - Size, measured by the product footprint, the annual amount spent with us, or the size of the company, was used to prioritize the companies and organizations with the most significant potential impact.

- Make contact multiple times a year, not just when you want money.

  - These “out of cycle” contacts became very important.

- Ask questions about key initiatives, milestones, and concerns.

  - We documented the responses, which helped seed subsequent conversations and demonstrated a genuine interest in what they were doing.

- Follow up!

- Request meetings to understand how they use our products and to give them a brief update on what our company has been doing.

  - Meeting people face-to-face is always good.

  - Learning more about their business, systems, goals, and challenges created opportunities to add value and become more of a partner in that customer’s success.

- Look at what they were doing with our products and offer suggestions to do more or to do something better or more efficiently, call out potential problems and offer solutions, and discuss best practices. Often, we would have a technical expert follow up and provide an hour or two of free assistance relating to those findings.

- Look for opportunities to congratulate them.

  - It demonstrates that they are important enough for you to pay attention. Google Alerts made this easy.

- Regularly ask our customers if there is anything we could do to help them.

  - They would often reciprocate, leading to more references and referrals.

- Continuous Improvement – Analyze the results and refine the process as needed on an ongoing basis.
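The profiling and prioritization steps above lend themselves to a simple structure. The sketch below is one possible approach, not the process my team actually used. It stores a small profile per customer and ranks customers by a weighted blend of normalized annual spend, footprint, and company size. The field names, weights, and sample customers are hypothetical, and usage and payment trends are left out for brevity.

```python
# Sketch: simple customer profiles and a priority score for outreach.
# Field names, weights, and sample customers are hypothetical.
from dataclasses import dataclass
from datetime import date
from typing import List


@dataclass
class CustomerProfile:
    name: str
    products: List[str]
    first_purchase: date
    footprint_seats: int   # size of the product footprint
    annual_spend: float    # annual amount spent with us
    employees: int         # size of the company
    industry: str


def priority_score(c: CustomerProfile, max_seats: int, max_spend: float,
                   max_employees: int) -> float:
    """Weighted blend of normalized spend, footprint, and company size (0..1)."""
    return (0.4 * c.annual_spend / max_spend
            + 0.4 * c.footprint_seats / max_seats
            + 0.2 * c.employees / max_employees)


def prioritize(customers: List[CustomerProfile]) -> List[CustomerProfile]:
    """Highest-priority customers first; `or 1` guards against division by zero."""
    max_seats = max(c.footprint_seats for c in customers) or 1
    max_spend = max(c.annual_spend for c in customers) or 1
    max_emp = max(c.employees for c in customers) or 1
    return sorted(customers,
                  key=lambda c: priority_score(c, max_seats, max_spend, max_emp),
                  reverse=True)


customers = [
    CustomerProfile("Acme Energy", ["Product A"], date(2012, 3, 1), 250, 180_000, 12_000, "Petroleum"),
    CustomerProfile("Beta Retail", ["Product B"], date(2016, 7, 15), 40, 25_000, 900, "Retail"),
    CustomerProfile("Gamma Logistics", ["Product A", "Product B"], date(2014, 1, 20), 120, 95_000, 4_000, "Logistics"),
]
for c in prioritize(customers):
    print(c.name)
```

Keeping the profile in a structured form also makes it easier to record responses from each contact and to map customers against each other when looking for trends.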

Before the meeting, we would spend an hour or two researching the company, its history, and significant events for it and within its industry, and identify its top 2-3 competitors. My consulting background came in handy as I “looked between the lines” to better understand the situation as we planned the meeting, focusing on what we wanted to walk away with and what we wanted the customer to walk away with from that meeting.

As we met with our Customers and Channel Partners, we would explain what ‘Customer for Life’ meant to us and the potential benefits to them. Before the meeting, we would check to see if we had (or they wanted) an NDA so they could speak freely and with confidence. Trust was important. The information disclosed would help us understand their situation, and we would map this against other customers in search of actionable trends. Showing interest and understanding created credibility. Asking relevant questions allowed conversations to progress to substantive issues in less time. From there, we focused on specific points that would positively impact that customer.

Over the course of two years, my team and I helped our customers innovate by providing different perspectives and ideas, modernize (e.g., moving to spatial analytics to get a more granular understanding of their own business, or cloud-enabling their systems to increase responsiveness to their business and often control costs), improve their systems, grow their businesses, and more. We also received feedback that helped us improve our products and a variety of processes, something that benefitted all customers. Occasionally, we learned about problems they were having. We took ownership of the issue, brought in the right people, and helped the customer find a resolution. Collaboration and success created strong relationships with many customers, especially in the segment with the largest customers and companies.

From a business perspective, our customer churn decreased by 50% over the same period, and organic growth increased by slightly more than 20%. We had achieved our objectives and improved our bottom line. The concepts behind Strategic Account Management, Voice of the Customer, Customer Experience, Customer Loyalty, and Customer Success had blended into a manageable, practical approach and provided a great ROI.
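Figures like these are easy to misread, because a "50% decrease" in churn is a relative change, not a drop of 50 percentage points. The sketch below shows the arithmetic with hypothetical numbers. The customer counts and growth rates are not from this post, and reading both reported figures as relative changes is an assumption.

```python
# Sketch: churn rate and relative change, using hypothetical figures.

def churn_rate(customers_at_start: int, customers_lost: int) -> float:
    """Share of customers at the start of a period who left during it."""
    return customers_lost / customers_at_start


def relative_change(before: float, after: float) -> float:
    return (after - before) / before


before = churn_rate(customers_at_start=1_000, customers_lost=80)  # 8.0%
after = churn_rate(customers_at_start=1_100, customers_lost=44)   # 4.0%
print(f"churn {before:.1%} -> {after:.1%}, relative change {relative_change(before, after):+.0%}")

growth_before, growth_after = 0.050, 0.061  # hypothetical organic growth rates
print(f"organic growth relative change {relative_change(growth_before, growth_after):+.0%}")
```

In this example, churn falls from 8% to 4%: a 4-point drop, which is a 50% relative decrease. Growth rising from 5.0% to 6.1% is a 22% relative increase.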

One of my biggest lessons learned was that adopting this mindset and creating a repeatable process should be started sooner rather than later.

Every day you are not creating your own ‘Customers for Life,’ there is a good chance that your competition is. Don’t let that happen to your business.

Edit: Added category and tags

The Downside of Easy (or, the Upside of a Good Challenge)

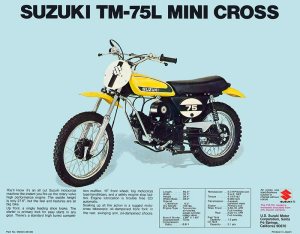

As a young boy, I was “that kid” who would take everything apart, often leaving a formerly functional alarm clock in a hundred pieces in a shoe box. I loved figuring out how things worked and how components worked together as a system. When I was 10, I spent one winter completely disassembling and reassembling my Suzuki TM75 motorcycle in my bedroom (my parents must have had so much more patience and understanding than I do as a parent). It was rebuilt by spring and ran like a champ. Beginner’s luck?

By then, I was hooked – I enjoyed working with my hands and fixing things. That was a valuable skill to have while growing up, as it provided an income and led to the first company I started at the age of 18. There was always a fair degree of trial and error involved with learning, but experience and experimentation led to simplification and standardization. That became the hallmark of the programs I wrote, and later, the application systems I designed and developed. It is a trait that has served me well over the years.

Today, I still enjoy doing many things myself, especially if I can spend a little time and save hundreds of dollars (which I usually invest in more tools). Finding examples and tutorials on YouTube is usually easy, and after watching a few videos for reference, the task is generally manageable. There is also a sense of satisfaction that comes with a job well done. And most of all, it is a great distraction from everything else that keeps your mind racing at 100 mph.

My wife’s 2011 Nissan Maxima needed a Cabin Air Filter, and instead of paying $80 again to have this done, I decided to do it myself. I purchased the filter for $15 and was ready to go. This shouldn’t take more than 5 or 10 minutes. I went to YouTube to find a video, but no luck. Then, I started searching various forums for guidance. There were plenty of posts complaining about the cost of replacement, but not much about how to do the work. I finally found a post that showed where the filter door was. I could already begin to feel that sense of accomplishment I was expecting in the next few minutes.

But fate and apparently a few sadistic Nissan Engineers had other plans. First, you needed to be a contortionist in order to reach the filter once the door was removed. Then, the old filter was nearly impossible to remove. And then, once the old filter was removed, I realized that the width of the filter entry slot was approximately 50% of the width of the filter. Man, what a horrible design!

A few fruitless Google searches later, I was more determined than ever to make this work. I tried several things and ultimately found a way to fold the filter so that it was small enough to get through the door and would fully open once released. A few minutes later, I was finally savoring my victory over that hellish filter change.

This experience brought back memories of “the old days.” In 1989, I was working for a marketing company as a Systems Analyst and was assigned the project to create the “Mitsubishi Bucks” salesperson incentive program. Salespeople would earn points for sales and could later redeem those points on Mitsubishi Electronics products. It was a very popular and successful incentive program.

Creating the forms and reports was straightforward, but tracking the points (which included generating past reports and adjusting activity from previous periods) presented a problem. I finally considered how a banking system would work (remember, there were no books on the topic before the Internet, so this was essentially reinventing the wheel) and designed my own. It was very exciting and rock solid. Statements could be accurately reproduced at any time, and an audit trail was maintained for all activity.
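The core idea behind a banking-style design can be sketched briefly. You keep an append-only ledger, post corrections as new entries instead of editing old ones, and give every entry both a posting date and an effective date. With that design, you can reproduce any statement as it was originally printed, restate it with later adjustments, and keep a full audit trail. The sketch below is a minimal illustration of that idea, assuming those two dates. The class and field names are hypothetical, and this is not the original system.

```python
# Sketch of an append-only points ledger, loosely modeled on a bank account.
# Class and field names are hypothetical; this is not the original system.
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass(frozen=True)
class Entry:
    entry_id: int
    account: str      # salesperson ID
    posted: date      # when the entry was recorded
    effective: date   # period the activity belongs to
    points: int       # positive = earned, negative = redeemed or corrected
    memo: str


class PointsLedger:
    def __init__(self) -> None:
        # Entries are only ever appended, never edited or deleted: the audit trail.
        self._entries: List[Entry] = []

    def post(self, account: str, posted: date, effective: date, points: int, memo: str) -> Entry:
        entry = Entry(len(self._entries) + 1, account, posted, effective, points, memo)
        self._entries.append(entry)
        return entry

    def balance(self, account: str, as_of: date, known_as_of: Optional[date] = None) -> int:
        """Points for activity effective on or before `as_of`.

        Passing `known_as_of` ignores entries posted after that date, which
        reproduces a statement exactly as it was originally printed.
        """
        known = known_as_of or date.max
        return sum(
            e.points
            for e in self._entries
            if e.account == account and e.effective <= as_of and e.posted <= known
        )


ledger = PointsLedger()
ledger.post("S-101", date(2024, 3, 31), date(2024, 3, 15), 500, "March sales")
ledger.post("S-101", date(2024, 4, 30), date(2024, 4, 10), 300, "April sales")
# A correction to March, discovered in May, is a new entry rather than an edit.
ledger.post("S-101", date(2024, 5, 5), date(2024, 3, 15), -100, "March correction")

print(ledger.balance("S-101", date(2024, 3, 31), known_as_of=date(2024, 3, 31)))  # 500
print(ledger.balance("S-101", date(2024, 3, 31)))  # 400, March restated
print(ledger.balance("S-101", date(2024, 5, 31)))  # 700, current balance
```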

Next, I needed to create validation processes and a fraud detection system for incoming data. This was rock solid, but instead of being a good thing, it became a real headache and source of frustration.

Salespeople would not always provide complete information, might have sloppy penmanship, or engage in other legitimate but unusual practices (such as bundling and adjusting prices among items in the bundle). Despite that, they expected immediate rewards, and having their submissions rejected apparently created more frustration than incentive.

So, I was instructed to turn the fraud detection dial way back. I let everyone know that while this would minimize rejections, it would increase the potential for fraud and the volume of rewards. I created a few reports to identify potentially fraudulent activity. It was amazing how creative people could be when trying to cheat the system, and how you could quickly identify patterns based on similar types of activities. By the third month, the system was trouble-free.
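The trade-off described above (fewer rejections, more exposure, plus reports to catch what slips through) can be sketched as rule-based scoring with an adjustable threshold. Claims that score below the rejection threshold but still trip a rule are paid and then listed on an exception report. The rules, point values, and thresholds here are hypothetical and are not the ones the original system used.

```python
# Sketch: rule-based claim validation with an adjustable "dial".
# The rules, point values, and thresholds are hypothetical.
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Claim:
    rep_id: str
    quantity: int
    unit_price: float
    list_price: float
    serial_number: str


def risk_points(c: Claim) -> int:
    points = 0
    if not c.serial_number:
        points += 4   # incomplete submission
    if c.quantity > 20:
        points += 3   # unusually large quantity for a single claim
    if c.list_price > 0 and c.unit_price < 0.6 * c.list_price:
        points += 3   # deep discount, e.g., prices shifted within a bundle
    return points


def review(claims: List[Claim], reject_at: int) -> Tuple[List[Claim], List[Claim], List[Claim]]:
    """Return (accepted, flagged, rejected).

    Flagged claims are paid but appear on an exception report for follow-up.
    Raising `reject_at` turns the dial back: fewer rejections, more flags.
    """
    accepted, flagged, rejected = [], [], []
    for c in claims:
        score = risk_points(c)
        if score >= reject_at:
            rejected.append(c)
        elif score > 0:
            flagged.append(c)
        else:
            accepted.append(c)
    return accepted, flagged, rejected


claims = [
    Claim("R1", 1, 499.0, 499.0, "SN123"),
    Claim("R2", 2, 150.0, 299.0, ""),
    Claim("R3", 30, 499.0, 499.0, "SN777"),
]
strict = review(claims, reject_at=3)
lenient = review(claims, reject_at=8)
print([len(group) for group in strict])   # [1, 0, 2]
print([len(group) for group in lenient])  # [1, 2, 0]
```

Grouping the flagged claims by representative and by rule is a straightforward next step, and it is where repeated patterns of similar activity tend to stand out.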

It was a great learning experience from beginning to end. It ran for several years after I left – something I know because I was still receiving the sample mailing with new sales promotions and “Spiffs” (sales incentives) every month. My later reflection made me wonder how many things are not being created or improved today because it is easier and less risky to follow an existing template.

We used to align fields and columns in byte order to minimize record size, overload operators, and apply other optimizations to maximize space utilization and performance. Our code was optimized for maximum efficiency because memory was scarce and processors were slow. Profiling and benchmarking programs brought you to the next level of performance. In a nutshell, you were forced to understand and become proficient with the technology out of necessity. Today, these concepts have become somewhat of a lost art.
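One common form of this kind of record tuning is ordering fields so that alignment padding is minimized. Whether this matches the exact technique used back then is an assumption. The sketch below uses Python's `ctypes` to lay out two C-style records with the same fields in different orders. Exact sizes depend on the platform's alignment rules, and the values in the comment are typical rather than guaranteed.

```python
# Sketch: field order changes record size because of alignment padding.
# Exact sizes are platform-dependent; common values are noted below.
import ctypes


class Unordered(ctypes.Structure):
    _fields_ = [
        ("flag", ctypes.c_char),     # 1 byte, then padding so the int is aligned
        ("amount", ctypes.c_int32),  # 4 bytes
        ("code", ctypes.c_char),     # 1 byte, then trailing padding
    ]


class Ordered(ctypes.Structure):
    _fields_ = [
        ("amount", ctypes.c_int32),  # widest field first
        ("flag", ctypes.c_char),
        ("code", ctypes.c_char),     # the two 1-byte fields share one padded tail
    ]


print(ctypes.sizeof(Unordered), ctypes.sizeof(Ordered))  # typically 12 and 8
```

Across millions of fixed-length records, saving a few bytes per record added up quickly when storage and memory were expensive.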

There are many upsides to being easy.

- My team sells more and closes deals faster because we make it easy for our customers to buy, implement, and start receiving value from the software we sell.

- Hobbyists like me can accomplish many tasks after watching just a short video or two.

- People are willing to try things they might not have tried if getting started were harder.

However, there may also be downsides for innovation and continuous improvement, simply because ‘easy’ is often considered ‘good enough.’

What will the impact be on human behavior once Artificial Intelligence (AI) becomes a reality and is in everyday use? It would be great to look ahead 25, 50, or 100 years and see the full impact of emerging technologies, but my guess is that I will see many of the effects in my own lifetime.

It’s not Rocket Science – What you Measure Defines how People Behave

I previously wrote a post titled “To Measure is to Know.”

The other side of the coin is that what you measure defines how people behave. This is an often forgotten aspect of Business Intelligence, Compensation Plans, Performance reviews, and other key areas in business. While many people view this topic as “common sense,” the numerous incentive plans you run across as a consultant, and the compensation plans you submit as a Manager, suggest otherwise.

Is it wrong to have people respond by focusing on specific aspects of their job that they are being measured on? That is a tricky question. The simple answer is “sometimes.” This is ultimately the desired outcome of implementing specific KPIs (key performance indicators), OKRs (objectives and key results), MBOs (Management by Objectives), and CSAT (Customer Satisfaction), but it doesn’t always work. Let’s dig into this a bit deeper.

One prime example is something seemingly easy yet often anything but – Compensation Plans. When properly implemented, these plans drive organic business growth through increased sales, revenue, and profits (three related items that should be measured). This can also drive steady cash flow by closing deals faster and within specific periods (usually months or quarters) and focusing on models that create the desired revenue stream (e.g., perpetual license sales versus subscription license sales versus SaaS subscription sales). What could be better than that?

Successful salespeople focus on the areas of their comp plan where they have the greatest opportunity to make money. Presumably, they are selling the products or services that you want them to based on that plan. MBO and OKR goals can be incorporated into plans to drive toward positive outcomes that are important to the business, such as bringing on new reference accounts. Those are forward-looking goals that increase future (as opposed to immediate) revenue. In a perfect world, with perfect comp plans, these business goals are codified and supported by motivational financial incentives.

Some of the most successful salespeople are the ones who primarily care only about themselves (although not at the expense of their company or customers). They are in the game for one reason: to make money. Give them a well-constructed plan that allows them to win, and they will do so in a predictable manner. Paying large commission checks should be a goal for every business because, under a properly constructed compensation plan, large checks mean the business itself is prospering. It needs to be a win-win design.

However, suppose a salesperson has a poorly constructed plan. In that case, they will likely find ways to personally win with deals inconsistent with company growth goals (e.g., paying a commission based on deal size but not factoring in profitability and discounts). Even worse, give them a plan that doesn’t provide a chance to win, and the results will be uncertain at best.
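The deal-size example can be made concrete. The sketch below compares a commission paid purely on deal size with one paid on gross margin, where the rate is reduced once the discount exceeds a cap. It then totals each plan over a list of deals, which is also a crude version of the backtesting mentioned later: run a candidate plan over last year's closed deals before rolling it out. The rates, the discount cap, and the deals are hypothetical.

```python
# Sketch: commission on deal size alone vs. on margin with a discount check.
# Rates, the discount cap, and the deals are hypothetical.
from typing import Callable, Dict, List

Deal = Dict[str, float]


def size_only_commission(deal: Deal, rate: float = 0.10) -> float:
    return deal["amount"] * rate


def margin_aware_commission(deal: Deal, rate: float = 0.15, discount_cap: float = 0.20) -> float:
    """Pay on gross margin; halve the rate when the discount exceeds the cap."""
    margin = max(0.0, deal["amount"] - deal["cost"])
    discount = 1 - deal["amount"] / deal["list_price"]
    effective_rate = rate if discount <= discount_cap else rate / 2
    return margin * effective_rate


def backtest(plan: Callable[[Deal], float], deals: List[Deal]) -> float:
    """Total payout if `plan` had been applied to these (historical) deals."""
    return sum(plan(d) for d in deals)


deals = [
    {"list_price": 100_000, "amount": 95_000, "cost": 30_000},   # near list price
    {"list_price": 200_000, "amount": 120_000, "cost": 90_000},  # 40% discount
]
for deal in deals:
    print(round(size_only_commission(deal)), round(margin_aware_commission(deal)))
for plan in (size_only_commission, margin_aware_commission):
    print(plan.__name__, round(backtest(plan, deals)))
```

Under the size-only plan, the heavily discounted deal pays the salesperson more. Under the margin-aware plan, the profitable deal does, which brings the incentive back in line with the company's goals.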

Just as most tasks tend to expand to use all the time available, salespeople tend to book most of their deals at the end of whatever period is used. With quarterly payment cycles, most of the business tends to book in the final week or two of the quarter, which is not ideal from a cash flow perspective. Using shorter monthly periods may increase business overhead. Still, the potential to level out the flow of booked deals (and associated cash flow) from salespeople working harder for that immediate benefit will likely be a worthwhile tradeoff. I pushed for this change while running a business unit, and we began seeing positive results within the first two months.

What about motivating Services teams? What I did with my company was to provide quarterly bonuses based on overall company profitability and each individual’s contribution to our success that quarter. Most of our projects used task-oriented billing, where we billed 50% up-front and 50% at the time of the final deliverables. You needed to both start and complete a task within a quarter to maximize your personal financial contribution, so there was plenty of incentive to deliver and quickly move to the next task. As long as quality remains high, this is a good thing.

We also factored in salary costs (i.e., if you earn more, you should be bringing in more value to the company), the cost of re-work, and non-financial items that were beneficial to the company. For example, writing a white paper, giving a presentation, helping others, or even providing formal documentation on lessons learned added business value and would be rewarded. Everyone was motivated to deliver quality work products in a timely manner, help each other, and do things that promoted the growth of the company. My company prospered, and my team made good money to make that happen. Another win-win scenario.
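To show why finishing a task within the quarter mattered, here is a minimal sketch of a quarterly contribution calculation. The 50/50 billing split comes from the description above. Subtracting salary and re-work costs and adding a credit for non-financial work is a hypothetical way to combine the other factors, not the exact formula we used.

```python
# Sketch: quarterly contribution for one services team member.
# The 50/50 billing split comes from the text; the salary, rework, and
# credit terms are a hypothetical way to combine the other factors.
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Task:
    fee: float
    start_quarter: str
    complete_quarter: Optional[str] = None  # None until final deliverables ship


def billed_in_quarter(task: Task, quarter: str) -> float:
    billed = 0.0
    if task.start_quarter == quarter:
        billed += 0.5 * task.fee   # 50% billed up front
    if task.complete_quarter == quarter:
        billed += 0.5 * task.fee   # 50% billed at final deliverables
    return billed


def contribution(tasks: List[Task], quarter: str, salary_cost: float,
                 rework_cost: float, credits: float) -> float:
    """Billings for the quarter, less salary and re-work, plus credits for
    non-financial work such as white papers or presentations."""
    billed = sum(billed_in_quarter(t, quarter) for t in tasks)
    return billed - salary_cost - rework_cost + credits


tasks = [
    Task(40_000, "Q1", "Q1"),   # started and finished in Q1: fully billed in Q1
    Task(30_000, "Q1", "Q2"),   # spans quarters: half billed in each
    Task(20_000, "Q2"),         # still in progress
]
print(contribution(tasks, "Q1", salary_cost=35_000, rework_cost=2_000, credits=1_000))
print(contribution(tasks, "Q2", salary_cost=35_000, rework_cost=0, credits=0))
```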

This approach worked very well for me and was continually validated over several years. It also fostered innovation because the team was always looking for ways to increase their value and earn more money. Many tools, processes, and procedures emerged from what would otherwise be routine engagements. Those tools and procedures increased efficiency, consistency, and quality. They also made it easier to onboard new employees and incorporate an outsourced team for larger projects.

Mistakes with comp plans can be costly – due to excessive payouts and/or because they are not generating the expected results. Backtesting is one form of validation as you build a plan. Short-term incentive programs are another. Remember, without some risk, there is usually little reward, so accept that some risk must be taken to find the point where the optimal behavior is fostered and then make plan adjustments accordingly.

It can be challenging and time-consuming to identify the right things to measure and the proper number of them (measuring too many or too few will likely fall short of goals), and to provide the incentives that motivate people to do what you want and need. But if you want your business to grow and be healthy, it must be done well.

This type of work isn’t rocket science and is well within everyone’s reach.